
Thursday, 9 December 2021, 10:17 JST


Source: Fujitsu Ltd


Cambridge, Massachusetts and Tokyo, Dec 9, 2021 - (JCN Newswire) - Fujitsu Limited and the Center for Brains, Minds and Machines (CBMM), headquartered at the Massachusetts Institute of Technology (MIT), have achieved an important milestone in a joint initiative to improve the accuracy of artificial intelligence (AI) models. The results of the collaboration are published in a paper describing computational principles, inspired by neuroscience, that enable AI models to recognize unseen (out-of-distribution, OOD) data (1) that deviates from the original training data. Highlights of the paper, including the accuracy improvements, will be presented at NeurIPS 2021 (the Conference on Neural Information Processing Systems) (2).

Principles that enable AI to recognize OOD data with high accuracy by using an original index of the AI's degree of image recognition

The advent of deep neural networks (DNNs) in recent years has expanded the real-world applications of AI and machine learning, including defect detection in manufacturing and diagnostic imaging in medicine. Although these AI models can sometimes match or exceed human performance, their recognition accuracy tends to deteriorate when conditions such as lighting and viewing angle differ significantly from those in the training datasets.

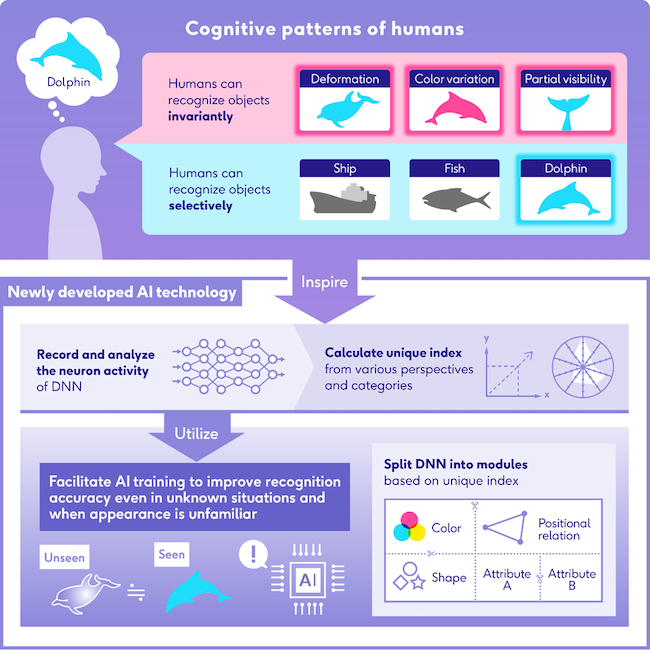

To address this, researchers at Fujitsu and CBMM have jointly advanced the understanding of principles that allow AI to recognize OOD data with high accuracy. Taking an approach inspired by human cognition and the structure of the brain, they divide the DNN into modules that each handle an attribute of the object, such as shape or color. An AI model built this way was rated the most accurate in an image-recognition evaluation on the CLEVR-CoGenT benchmark (3), as reported in the paper the group presented at NeurIPS.

Dr. Seishi Okamoto, Fellow at Fujitsu Limited commented, "Since 2019, Fujitsu has engaged in joint research with MIT's CBMM to deepen our understanding of how the human brain synthesizes information to generate intelligent behavior, pursuing how to realize such intelligence as AI and leveraging this knowledge that contributes to solving problems facing a variety of industries and society at large. This achievement marks a major milestone for the future development of AI technology that could deliver a new tool for training models that can respond flexibly to different situations and recognize even unknown data that differs considerably from the original training data with high accuracy, and we look forward to the exciting real-world possibilities this opens up."

Dr. Tomaso Poggio, the Eugene McDermott Professor at the Department of Brain and Cognitive Sciences at MIT and Director of the Center for Brains, Minds and Machines, remarked, "There is a significant gap between DNNs and humans when evaluated in out-of-distribution conditions, which severely compromises AI applications, especially in terms of their safety and fairness. Research inspired by neuroscience may lead to novel technologies capable of overcoming dataset bias. The results obtained so far in this research program are a good step in this direction."

Possible future applications may include traffic-monitoring AI that adapts to changing observation conditions and diagnostic medical-imaging AI that correctly recognizes different types of lesions.

About the New Method

The research builds on the observation that the human brain can accurately capture and classify visual information even when the objects we perceive vary in shape and color. The new method computes an original index based on how neurons respond to an object and how the DNN classifies the input images. Training encourages this index to increase, which helps the model recognize OOD objects more effectively.
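The release does not give the formula for this index. Purely as a hypothetical illustration, the Python sketch below computes a common neuroscience-style selectivity score for a single neuron: how much more strongly it responds to its preferred attribute value (for example, one color) than to the other values. The function name `selectivity_index`, the formula, and the toy activations are assumptions made for illustration and are not taken from the Fujitsu and CBMM paper.

```python
from statistics import mean
from typing import Mapping, Sequence


def selectivity_index(responses_by_value: Mapping[str, Sequence[float]]) -> float:
    """Hypothetical selectivity score for a single neuron (illustration only).

    responses_by_value maps each attribute value (e.g. "red", "blue") to the
    neuron's non-negative activations on images showing that value.
    Returns (mu_pref - mu_rest) / (mu_pref + mu_rest): 0 means the neuron
    responds equally to every value, 1 means it responds only to its
    preferred value.
    """
    if len(responses_by_value) < 2:
        raise ValueError("need activations for at least two attribute values")
    # Mean activation for each attribute value.
    means = {value: mean(acts) for value, acts in responses_by_value.items()}
    # The preferred value is the one that drives the neuron hardest.
    preferred = max(means, key=means.get)
    mu_pref = means[preferred]
    # Average of the mean responses to all non-preferred values.
    mu_rest = mean(m for value, m in means.items() if value != preferred)
    denom = mu_pref + mu_rest
    return 0.0 if denom == 0 else (mu_pref - mu_rest) / denom


# Toy activations for one hypothetical "red-detector" neuron.
score = selectivity_index({"red": [0.9, 1.1], "blue": [0.1, 0.1], "green": [0.0, 0.2]})
print(round(score, 3))  # 0.818
```

In this sketch, a score close to 1 marks a neuron that responds almost exclusively to one attribute value; how the actual index combines neuron responses with the DNN's classification is not described in the release.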

Until now, it was assumed that the best way to build an AI model with high recognition accuracy was to train the DNN as a single module without splitting it up. However, by splitting the DNN into separate modules for the shape, color, and other attributes of objects, guided by the newly developed index, researchers at Fujitsu and CBMM achieved higher recognition accuracy.
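As a hedged toy illustration of why attribute-specific modules can help with unseen combinations like those in CLEVR-CoGenT, the Python sketch below compares a single module that predicts a joint (shape, color) label with two separate modules that each read only their own attribute's features. Nearest-centroid classifiers stand in for DNN modules, and the feature encoding, function names, and data are hypothetical; this is not the architecture used in the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def nearest_centroid(train: List[Tuple[Vector, str]], x: Vector) -> str:
    """Return the label whose mean training vector is closest to x."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for vec, label in train:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    centroids = {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}
    return min(centroids, key=lambda lab: math.dist(centroids[lab], x))


# Hypothetical toy encoding: dims 0-1 = shape (cube, sphere), dims 2-3 = color (red, blue).
TRAIN = [
    ((1.0, 0.0, 1.0, 0.0), ("cube", "red")),
    ((0.0, 1.0, 0.0, 1.0), ("sphere", "blue")),
]


def monolithic_predict(x: Vector) -> Tuple[str, str]:
    # One module, one joint label: it can only output combinations seen in training.
    joint = [(vec, f"{s} {c}") for vec, (s, c) in TRAIN]
    shape, color = nearest_centroid(joint, x).split()
    return shape, color


def modular_predict(x: Vector) -> Tuple[str, str]:
    # Separate modules, each reading only its own attribute's features.
    shape = nearest_centroid([(vec[:2], s) for vec, (s, _) in TRAIN], x[:2])
    color = nearest_centroid([(vec[2:], c) for vec, (_, c) in TRAIN], x[2:])
    return shape, color


unseen = (1.0, 0.0, 0.0, 1.0)  # a blue cube, never seen during training
print(monolithic_predict(unseen))  # a combination seen in training, never ('cube', 'blue')
print(modular_predict(unseen))     # ('cube', 'blue')
```

Because the single module can only output combinations it saw during training, it cannot name the blue cube, whereas the modular version recombines the shape and color it learned separately.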

Future Plans

Fujitsu and CBMM hope to further refine these findings to develop AI capable of flexible, human-like judgments, with the aim of applying it in areas such as manufacturing and medical care.

(1) OOD data:

Data substantially different from the data seen during the AI training.

(2) Presented at NeurIPS:

"How Modular Should Neural Module Networks Be for Systematic Generalization?"; planned presentation date and time: December 8, 2021, 4:30 PM PST / December 9, 2021, 9:30 AM JST

Presenters: Vanessa D'Amario (Massachusetts Institute of Technology), Tomotake Sasaki (Fujitsu) and Xavier Boix (Massachusetts Institute of Technology) https://neurips.cc/Conferences/2021/Schedule?showEvent=26740

(3) CLEVR-CoGenT dataset:

A benchmark developed by Stanford University to measure an AI's ability to recognize new combinations of objects and attributes.

https://cs.stanford.edu/people/jcjohns/clevr/

About Fujitsu

Fujitsu is the leading Japanese information and communication technology (ICT) company offering a full range of technology products, solutions and services. Approximately 126,000 Fujitsu people support customers in more than 100 countries. We use our experience and the power of ICT to shape the future of society with our customers. Fujitsu Limited (TSE:6702) reported consolidated revenues of 3.6 trillion yen (US$34 billion) for the fiscal year ended March 31, 2021. For more information, please see www.fujitsu.com.

About the Center for Brains, Minds, and Machines at MIT

CBMM is a multi-institutional NSF Science and Technology Center headquartered at MIT, dedicated to developing a computationally based understanding of human intelligence and establishing an engineering practice based on that understanding. CBMM brings together computer scientists, cognitive scientists, and neuroscientists to create a new field: the Science and Engineering of Intelligence.

This work was supported in part by the Center for Brains, Minds and Machines (CBMM), funded by NSF STC award CCF-1231216.

Topic: Press release summary


Sectors: Enterprise IT, Artificial Intel [AI], MedTech

https://www.acnnewswire.com

From the Asia Corporate News Network

Copyright © 2026 ACN Newswire. All rights reserved. A division of Asia Corporate News Network.
